MEGA SEO: Building the next generation of blogging with AI workflows

Joe Adams · 10/4/2024 · 10 min read

As a software engineer with over a decade of experience, I've used my fair share of orchestration solutions (Airflow, AWS Step Functions, SQS, etc). But when my co-founder and I started MEGA SEO, we knew we would live or die by our ability to ship, maintain, and iterate quickly on our content pipelines.

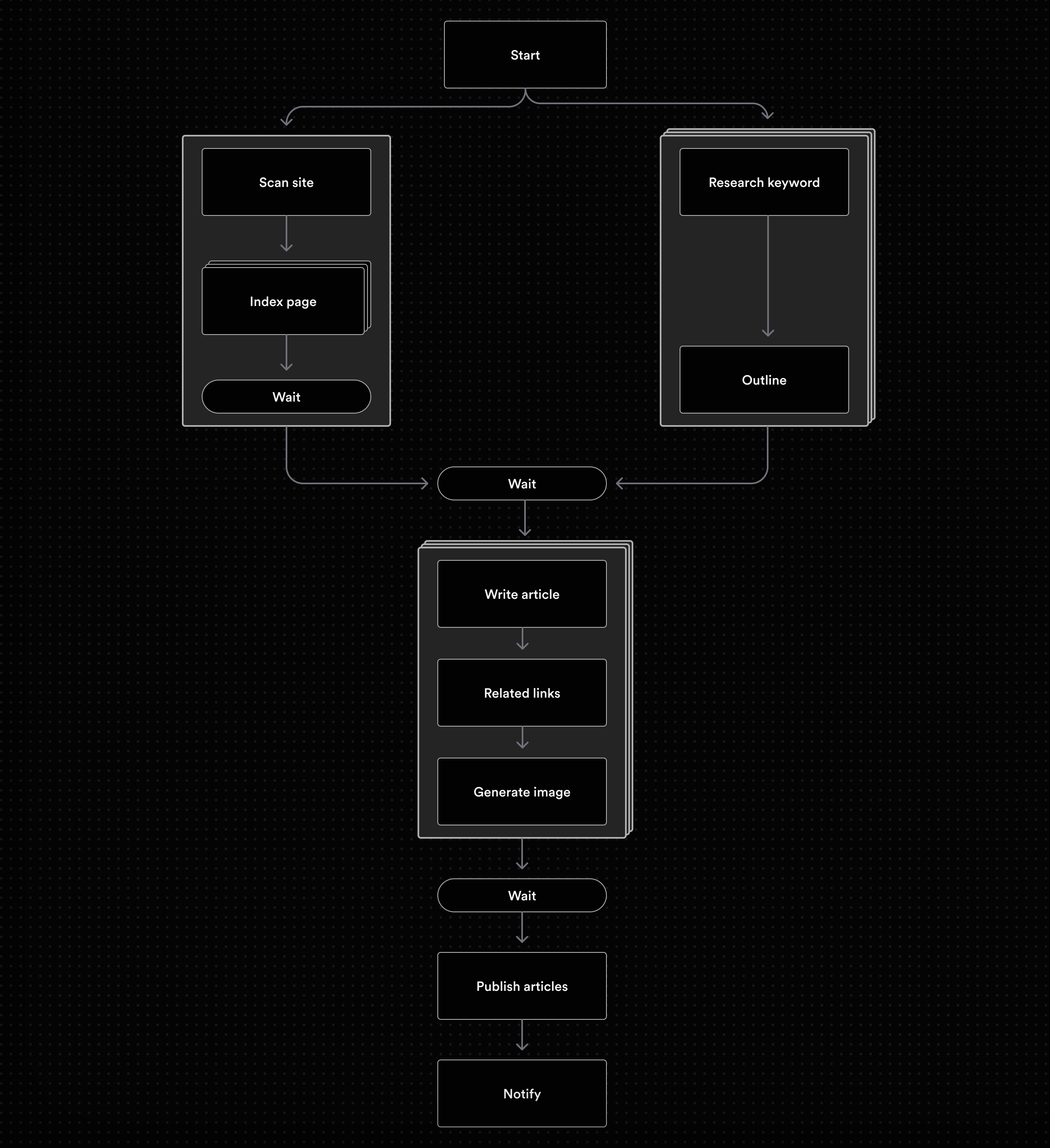

When we set out to build MEGA SEO, our goal was simple: automate blogging using AI. But as any developer knows, "simple" goals often hide complex challenges. To make sure our content was high quality, we needed a robust system to handle everything from indexing websites to generating and publishing content. This required overcoming the complexity of deploying multi-stage workflows, handling errors and API rate limits, all while shipping fast.

Our AI-powered platform handles everything from keyword research to article writing, all tailored to optimize our customers' SEO performance. But here's the kicker: orchestrating this multi-step process isn't child's play. It involves a series of steps (indexing, writing, editing, and publishing), each of which has to maintain high quality and relevance.

Under the Hood: A Complex Multi-Stage AI Workflow

Picture this: you give MEGA SEO a URL, and then we give you fresh, SEO-optimized articles every day, no prompting needed. Sounds simple, right? But behind the scenes, it's like conducting an orchestra. We crawl your site, understand your content, generate relevant topics, enrich those topics with search APIs, write high-quality articles, and publish them to your blog in one click. All of this while ensuring each piece is SEO-optimized and aligns with your brand voice, and while generating images in your brand style.

The complexity doesn't end there. We're dealing with multiple AI models, each with its own quirks and limitations. We need to manage rate limits, handle failures gracefully, and ensure the entire process is scalable.

Luckily, we found Inngest, which allowed us to tame the chaos. With Inngest, we could break down our complex process into manageable steps, run tasks in parallel, and handle retries and errors.

Inngest's event-based triggers were a perfect fit for our needs. They allowed us to trigger different parts of our workflow based on specific events, making our system more responsive and efficient. For example, when a new article outline is ready, Inngest automatically kicks off the writing process without any manual intervention.

Generating a high-quality, AI-written article can take several minutes. With traditional serverless setups, this would be a nightmare. But Inngest handles these long-running tasks seamlessly, allowing our system to scale.

First Steps: Preparing the Groundwork

Before we could turn our AI writer loose, there was crucial groundwork to lay.

When a new user signs up, they provide their website URL. Our AI then springs into action, reading through their site to understand their business and target audience. It even suggests two promising keywords to target. This forms the foundation of our content strategy.

    // writer_pipeline.ts
    export const writerPipeline = client.createFunction(
      { id: "writer-pipeline" },
      { event: Events.WRITER_PIPELINE },
      async ({ event, step }) => {
        const { keywords, url, targetAudience } = event.data;

        // Index the blog | Write blog post outlines
        // Write the blog post
        // Publish the blog post
        // Send notifications
      }
    );

Indexing the Blog

The first major task is crawling and indexing the client's blog. We use a custom crawler to scan through their existing content, extracting valuable information about their writing style, topics, and SEO patterns.

This data is then stored in a vector database hosted by Pinecone, which allows for fast searches later in the process. Inngest's parallel processing capabilities make this step a breeze, allowing us to index even large blogs quickly and efficiently.

    // index_functions.ts

    // Fetches one page and stores it in the vector index.
    export const indexPage = client.createFunction(
      { id: "index-page" },
      { event: Events.INDEX_PAGE },
      async ({ event, step }) => {
        const { pageUrl } = event.data;

        const page = await step.run("get-page-content", async () => {
          return getPageContent(pageUrl);
        });

        await step.run("index-page", async () => {
          return saveToPinecone({ page });
        });
      }
    );

    // Finds every page on the site and fans out one indexPage run per page.
    export const indexSite = client.createFunction(
      { id: "index-site" },
      { event: Events.INDEX_SITE },
      async ({ event, step }) => {
        const { url } = event.data;

        const pages = await step.run("find-pages", async () => {
          return findPagesToIndex(url);
        });

        // Each invocation gets its own step ID, so the pages are indexed in parallel.
        await Promise.all(
          pages.map(async (page) => {
            return step.invoke(`index-page-${page.url}`, {
              function: indexPage,
              data: {
                pageUrl: page.url,
              },
            });
          })
        );
      }
    );

If we were using a traditional queue system like SQS, this process would be far more cumbersome. We'd have to manually manage the indexing jobs, handle retries, and deal with the complexities of long-running tasks. With Inngest, it's all handled seamlessly.

Writing the Outline

While the indexing is happening, we kick off another crucial step: generating article outlines based on the suggested keywords. This is a two-step process involving keyword research and our outliner agent.

Our AI writer dives deep into the topic, identifying keyword intent, key questions that need answering, and topics not yet covered by existing pages. It's like having a seasoned content strategist working around the clock.

    // outliner.ts
    export const writeOutline = client.createFunction(
      { id: "write-outline" },
      { event: Events.WRITE_OUTLINE },
      async ({ event, step }) => {
        const { keyword } = event.data as WriteOutlineRequest;

        const research = await step.run("conduct-research", async () => {
          return conductResearch(keyword);
        });

        const outline = await step.run("write-outline", async () => {
          // generateOutline is the plain outliner helper (name assumed here);
          // it needs a different name from the exported Inngest function.
          return generateOutline(research);
        });

        return outline;
      }
    );

Inngest orchestrates this task smoothly, ensuring that the outline generation doesn't interfere with the ongoing indexing process. But here's where it gets really useful: let's say the outlining finishes, but the indexing fails and needs to be retried. With a traditional setup, we might end up regenerating the outline unnecessarily. But Inngest is smarter than that. It only reruns the failed step, saving us time and money – crucial when you're working with expensive AI models.

This level of granular control and efficiency would be a nightmare to implement with SQS. We'd be drowning in a sea of queue management, error handling, and state tracking. With Inngest, it's all handled out of the box, allowing us to focus on perfecting our AI algorithms instead of wrestling with infrastructure.
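To make the "only rerun the failed step" behaviour concrete, here is a toy model of step memoization in plain TypeScript. It is a sketch of the idea, not Inngest's implementation: `RunState`, `runStep`, `pipeline`, and `runWithRetries` are hypothetical names, and the two steps run one after the other rather than in parallel to keep the example short.

```typescript
// Results of steps that already succeeded, keyed by step ID (hypothetical model).
type RunState = Map<string, unknown>;

function runStep<T>(state: RunState, id: string, fn: () => T): T {
  if (state.has(id)) {
    // This step succeeded in an earlier attempt: reuse its stored result.
    return state.get(id) as T;
  }
  const value = fn(); // If fn throws, nothing is stored, so the step reruns next attempt.
  state.set(id, value);
  return value;
}

// Counters that record how often each piece of work really executes.
const calls = { outline: 0, index: 0 };

function pipeline(state: RunState): string {
  const outline = runStep(state, "write-outline", () => {
    calls.outline += 1; // stands in for an expensive LLM call
    return "outline for 'ai blogging'";
  });
  const indexedPages = runStep(state, "index-site", () => {
    calls.index += 1;
    if (calls.index === 1) throw new Error("crawler timed out"); // fails on the first attempt only
    return 42;
  });
  return `${outline} / ${indexedPages} pages`;
}

// Re-run the whole function after a failure, keeping the step state between attempts.
function runWithRetries(maxAttempts: number): string {
  const state: RunState = new Map();
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      return pipeline(state);
    } catch (err) {
      lastError = err;
    }
  }
  throw lastError;
}

const finalResult = runWithRetries(3);
```

After the run, `calls.outline` is 1 and `calls.index` is 2: the outline was generated once, and only the failed indexing step was executed again.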

Putting it together

Inngest makes it easy to compose our two functions. All you have to do is "await" the calls using Promise.all(), like regular TypeScript code.

    // writer_pipeline.ts
    // Import paths assume the files shown above sit in the same directory.
    import { indexSite } from "./index_functions";
    import { writeOutline } from "./outliner";

    export const writerPipeline = client.createFunction(
      { id: "writer-pipeline" },
      { event: Events.WRITER_PIPELINE },
      async ({ event, step }) => {
        const { keywords, url } = event.data as WriterPipelineRequest;

        // Index the site and write one outline per keyword, all in parallel.
        const [siteResult, ...outlineResults] = await Promise.all([
          step.invoke("index-site", {
            function: indexSite,
            data: { url },
          }),
          ...keywords.map(async (keyword) => {
            return step.invoke(`write-outline-${keyword}`, {
              function: writeOutline,
              data: { keyword },
            });
          }),
        ]);

        // Write the article
        // Publish the article
        // Send notifications
      }
    );

Creating an Article: Bringing Content to Life

The heart of MEGA SEO's operation lies in its writing agent. Our system juggles several different article-creating workflows, each with its own nuances. All these flows funnel through a unified Inngest function, complete with centralized rate limiting and concurrency management. This approach is crucial for managing our LLM token usage, which has strict per-minute limits. Additionally, it ensures that all writing flows benefit from improvements to the writer agent.
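As a rough illustration of what a per-minute limit means, the sketch below implements a simplified fixed-window limiter. This is an assumption-laden stand-in: Inngest's own rate limiting may use a different algorithm, and `createLimiter` and `tryAcquire` are hypothetical names. The clock is passed in explicitly so the behaviour is easy to follow.

```typescript
// Simplified fixed-window limiter: allow at most `limit` calls per `periodMs`.
// Hypothetical helper for illustration only, not part of any library.
function createLimiter(limit: number, periodMs: number) {
  let windowStart = 0;
  let count = 0;

  // Returns true if a call at time nowMs is allowed, false if it is over the limit.
  return function tryAcquire(nowMs: number): boolean {
    if (nowMs - windowStart >= periodMs) {
      // A new window begins: reset the counter.
      windowStart = nowMs;
      count = 0;
    }
    if (count < limit) {
      count += 1;
      return true;
    }
    return false;
  };
}

// 10 calls per minute, matching the numbers used in the writer function.
const tryAcquire = createLimiter(10, 60000);

// Twelve requests arrive one second apart within the first minute.
const firstMinute = Array.from({ length: 12 }, (_, i) => tryAcquire(i * 1000));
const allowedInFirstMinute = firstMinute.filter(Boolean).length;

// A request arriving once the minute has elapsed starts a fresh window.
const allowedAfterReset = tryAcquire(60000);
```

Here the first ten requests are allowed, the last two are rejected, and the request at the one-minute mark is allowed again. Putting this logic in one place is what keeps every writing flow under the same LLM quota.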

The Writing Agent

The AI-driven writing process transforms our carefully crafted outlines into full-fledged articles. As part of this process, the writer fleshes out the existing research with even more sources from across the web. It contextually embeds links to these sources when constructing the article. The writer is structured to make sure it always produces critical sections that increase value like key takeaways, conclusions and FAQs.
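One simple way to enforce required sections is to check a draft's headings before accepting it. The sketch below assumes articles are produced as Markdown and that the sections use the heading titles listed in `REQUIRED_SECTIONS`; both are assumptions for illustration, and `missingSections` is a hypothetical helper rather than MEGA SEO's actual check.

```typescript
// Assumed heading titles for the sections every article should contain.
const REQUIRED_SECTIONS = ["Key Takeaways", "Conclusion", "FAQs"];

// Returns the required section titles that do not appear as a Markdown heading.
function missingSections(markdown: string, required: string[]): string[] {
  const headings = new Set(
    markdown
      .split("\n")
      .filter((line) => /^#{1,6}\s/.test(line)) // keep only heading lines
      .map((line) => line.replace(/^#{1,6}\s+/, "").trim().toLowerCase())
  );
  return required.filter((title) => !headings.has(title.toLowerCase()));
}

const draft = [
  "# AI Blogging 101",
  "## Key Takeaways",
  "- Automate the boring parts.",
  "## Why it matters",
  "## Conclusion",
  "Wrap-up paragraph.",
].join("\n");

// This draft has no FAQs section, so it would be sent back for another pass.
const missing = missingSections(draft, REQUIRED_SECTIONS);
```

A check like this could run as its own step after the draft is written, so a failed check retries only the writing and not the research.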

Inngest's step functions allow us to break down the writing process into manageable chunks. This granular control would have been a nightmare to implement with a traditional queue-based system like SQS, where each step would require its own queue and error handling logic.

Finding Related Links

Once the article is written, we leverage our indexed blog content. An agent sifts through the Pinecone vector database, identifying the most relevant articles to link within the new content. This creates a web of interconnected posts, improving SEO performance and user engagement.

The writer then automatically generates a "Related Articles" section, further increasing the value of each piece.
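The related-article lookup boils down to a nearest-neighbour search over embeddings. A vector database such as Pinecone performs that search server-side; the in-memory sketch below only illustrates the idea using cosine similarity. `IndexedPage`, `cosineSimilarity`, `findRelated`, the URLs, and the three-dimensional vectors are all made up for the example.

```typescript
// A page from the indexed blog with its embedding (hypothetical shape).
interface IndexedPage {
  url: string;
  embedding: number[];
}

// Cosine similarity between two vectors of equal length.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("vectors must have the same length");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  a.forEach((x, i) => {
    const y = b[i] ?? 0;
    dot += x * y;
    normA += x * x;
    normB += y * y;
  });
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Returns the URLs of the k pages most similar to the new article.
function findRelated(article: number[], pages: IndexedPage[], k: number): string[] {
  return pages
    .map((page) => ({ url: page.url, score: cosineSimilarity(article, page.embedding) }))
    .sort((x, y) => y.score - x.score) // highest similarity first
    .slice(0, k)
    .map((hit) => hit.url);
}

const indexedPages: IndexedPage[] = [
  { url: "/pricing", embedding: [1, 0, 0] },
  { url: "/ai-blogging-guide", embedding: [0.1, 0.9, 0.2] },
  { url: "/seo-checklist", embedding: [0, 0.7, 0.7] },
];

// Embedding of the freshly written article (toy values).
const related = findRelated([0, 1, 0.1], indexedPages, 2);
```

With these toy vectors, the guide ranks first and the checklist second, while the pricing page is left out because it points in an unrelated direction.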

Generate the Image

No article is complete without a captivating image. Our system uses Recraft's AI image generation API to create unique visuals for each post. Recraft is an industry-leading AI image tool, capable of creating everything from photorealistic images to vector graphics.

Putting it all together

With all those steps, our writer function looks like this:

    // writer_agent.ts
    export const writeArticle = client.createFunction(
      {
        id: "write-article",
        // Flow control options live in the function config, next to the id.
        concurrency: {
          limit: 5,
        },
        rateLimit: {
          // Avoid hitting tokens per minute rate limits
          limit: 10,
          period: "1m",
        },
      },
      { event: Events.WRITE_ARTICLE },
      async ({ event, step }) => {
        const { outline, keywords, targetAudience, customerId } =
          event.data as CreateArticleRequest;

        const article = await step.run("write-article", async () => {
          // draftArticle is the plain writer helper (name assumed here);
          // it needs a different name from the exported Inngest function.
          return draftArticle(outline, keywords, targetAudience);
        });

        // Link insertion and image generation are independent, so run them in parallel.
        const [linkedArticle, image] = await Promise.all([
          step.run("insert-links", async () => {
            return insertRelatedLinks(article, customerId);
          }),
          step.run("generate-image", async () => {
            return generateCustomImage(article);
          }),
        ]);

        return {
          content: linkedArticle,
          image,
        };
      }
    );

Delivering the Final Product

With the article polished and ready, we move to the final stage: publication.

Once the article is returned from our creation pipeline, we publish it to the customer portal. For our free article offerings, we trigger an email notification to the client. Because we attach a deduplication ID to that event, Inngest ensures that even if the event is sent more than once, the email goes out only once. This reliability is crucial for maintaining trust with our customers.
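The effect of a deduplication ID can be modelled in a few lines. This is a simplified in-memory sketch, not how Inngest stores or expires IDs: `AppEvent` and `createDeduplicatingSender` are hypothetical names, and the customer ID is a placeholder.

```typescript
// Minimal event shape for the example (hypothetical).
interface AppEvent {
  name: string;
  id?: string; // optional deduplication key
  data: { customerId: string };
}

// Wraps a handler so that events with an already-seen id are ignored.
function createDeduplicatingSender(handler: (evt: AppEvent) => void) {
  const seenIds = new Set<string>();
  return function send(evt: AppEvent): boolean {
    if (evt.id !== undefined) {
      if (seenIds.has(evt.id)) return false; // duplicate: do nothing
      seenIds.add(evt.id);
    }
    handler(evt);
    return true;
  };
}

// Record which customers would have been emailed.
const emailsSent: string[] = [];
const sendOnce = createDeduplicatingSender((evt) => {
  emailsSent.push(evt.data.customerId);
});

const emailEvent: AppEvent = {
  name: "send-emails",
  id: "send-emails-cust_123",
  data: { customerId: "cust_123" },
};

const firstAttempt = sendOnce(emailEvent); // handled
const secondAttempt = sendOnce(emailEvent); // same id, so ignored
```

The second send is dropped because it carries the same ID, so `emailsSent` ends up with a single entry. Choosing what goes into the ID decides what counts as "the same" notification.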

The Final Product

The Whole Write Pipeline

With Inngest, orchestrating all of those steps together is straightforward. This code is easy to understand, maintain, and iterate on. It's all in a single repository, so it's easy to read and quick to figure out the structure and implementation of the different functions.

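    // writer_pipeline.ts
    // Import paths assume the files shown above sit in the same directory.
    import { indexSite } from "./index_functions";
    import { writeOutline } from "./outliner";
    import { writeArticle } from "./writer_agent";

    export const writerPipeline = client.createFunction(
      { id: "writer-pipeline" },
      { event: Events.WRITER_PIPELINE },
      async ({ event, step }) => {
        const { keywords, url, targetAudience, customerId } =
          event.data as WriterPipelineRequest;

        // Index the site and write one outline per keyword, all in parallel.
        const [siteResult, ...outlineResults] = await Promise.all([
          step.invoke("index-site", {
            function: indexSite,
            data: { url },
          }),
          ...keywords.map(async (keyword) => {
            return step.invoke(`write-outline-${keyword}`, {
              function: writeOutline,
              data: { keyword },
            });
          }),
        ]);

        // Turn each outline into a full article.
        const articles = await Promise.all(
          outlineResults.map(async (outline, index) => {
            return step.invoke(`write-article-${index}`, {
              function: writeArticle,
              // customerId is needed by the writer to look up related links.
              data: { outline, keywords, targetAudience, customerId },
            });
          })
        );

        await step.run("publish-articles", async () => {
          return publishArticles(articles);
        });

        // The first argument is the step ID; the event name goes in the payload.
        await step.sendEvent("send-emails", {
          name: Events.SEND_EMAILS,
          data: {
            customerId,
            articles,
          },
          // Deduplication key
          id: `send-emails-${customerId}`,
        });

        return {
          siteResult,
          articles,
        };
      }
    );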

We're big fans

My journey with Inngest began out of exasperation. At my previous company, we built our own queue framework using Amazon SQS. It was a constant headache. We spent countless hours managing queue creation, dead letter queues, performance tuning, and wrestling with timeouts and visibility windows. It was a maze of complexity that took our focus away from building our core product.

When we started MEGA SEO, I knew we needed a better solution. Our product was going to be even more reliant on running workflows at scale. That's when I discovered Inngest.

As I got to use it, I quickly learned that it offers the features of systems like Apache Airflow, but is much easier to use and more robust. Everything we need is in one place, from workflow definition to execution and monitoring. This makes it so much faster to understand and improve workflows, letting us ship faster.